EASE Milestones

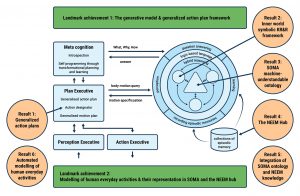

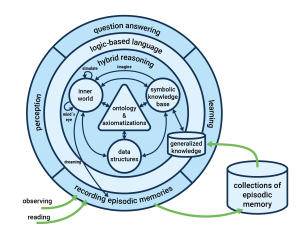

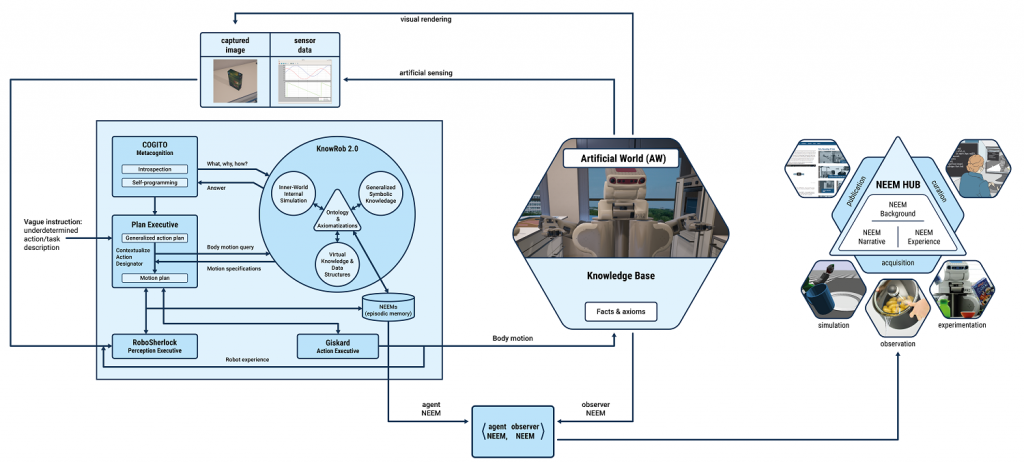

In the first phase of EASE, we proposed KnowRob2.0, a second-generation KR&R (Knowledge Representation & Reasoning) framework for robot agents. KnowRob2.0 is an extension and partial redesign of KnowRob that provides the KR&R mechanisms needed to make informed decisions about how to parameterize motions in order to accomplish manipulation tasks.

The extensions and new capabilities include highly detailed symbolic/subsymbolic models of environments and robot experiences, visual reasoning, and simulation-based reasoning. The redesign adds an interface layer that unifies heterogeneous representations through a uniform, entity-centered, logic-based language for knowledge query and retrieval.

For further details, please go to http://knowrob.org/.

The systematic organization and formalization of background knowledge in EASE takes place in the EASE ontology, which covers EASE knowledge as well as the associated data structures and processes, and makes the key concepts, data structures, and processes machine-understandable. By machine-understandable, we mean that the robot can answer queries formulated with the concepts and relations defined in the ontology, using symbolic reasoning based on the axiomatizations of the relevant EASE concepts.

EASE employs a collection of ontologies organized under a very concise foundational (top-level) ontology called SOMA (Socio-physical Model of Activities). SOMA is a parsimonious extension of the DUL (DOLCE+DnS Ultralite) ontology, in which additional concepts and relations provide a deeper semantics of autonomous activities, objects, agents, and environments. SOMA has been complemented with various subontologies that provide background knowledge on everyday activities and on robot and human activity, including axiomatizations of NEEMs, 48 common models of actions, robots, affordances, execution failures, and so on.
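To illustrate what answering a query by symbolic reasoning over axiomatized concepts can look like, here is a minimal sketch in Python. It is not SOMA, DUL, or the KnowRob query language: the class names, the individuals, and both helper functions are hypothetical, and the only reasoning it performs is transitive subclass (subsumption) inference.

```python
# Toy taxonomy. The class names are illustrative and NOT taken from SOMA or DUL.
SUBCLASS_OF = {
    "PickingUp": "Manipulating",
    "Placing": "Manipulating",
    "Manipulating": "PhysicalAction",
    "PhysicalAction": "Action",
}

# Asserted types of individuals, as a robot might log them (hypothetical).
INSTANCE_OF = {
    "event_17": "PickingUp",
    "event_18": "Placing",
    "event_19": "Action",
}


def superclasses(cls):
    """Return cls followed by all its ancestors, following subclass axioms."""
    chain = [cls]
    while chain[-1] in SUBCLASS_OF:
        chain.append(SUBCLASS_OF[chain[-1]])
    return chain


def instances_of(cls):
    """Answer 'which individuals are a cls?' using subsumption reasoning."""
    return sorted(
        name
        for name, asserted in INSTANCE_OF.items()
        if cls in superclasses(asserted)
    )


if __name__ == "__main__":
    print(instances_of("Manipulating"))
    print(instances_of("Action"))
```

No individual is asserted to be a `Manipulating` event directly; the answer follows only from the subclass axioms, which is the sense of "machine-understandable" described above.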

For further details, please go to https://ease-crc.github.io/soma/.

The NEEM Hub is the data store for robot agents: it stores and manages NEEMs (Narrative-enabled episodic memories) and provides a software infrastructure for analyzing and learning from them. NEEMs store the data generated by robot agents during everyday manipulation in a form that enables knowledge extraction. More formally, NEEMs are CRAM’s generalization of episodic memory (encapsulating subsymbolic experiential episodic data, motor control procedural data, and descriptive semantic annotation), together with the accompanying mechanisms for acquiring them and learning from them in KnowRob.
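The pairing of semantic annotation with subsymbolic data can be pictured with a small data structure. The following is a sketch under stated assumptions, not the NEEM format: the `Neem` and `NarrativeEvent` classes, their fields, and the sample values are all hypothetical. It only shows why linking the two kinds of data by time makes knowledge extraction possible.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class NarrativeEvent:
    """Symbolic annotation: what happened and when (hypothetical schema)."""
    action: str
    start: float  # seconds
    end: float    # seconds


@dataclass
class Neem:
    """Toy episodic memory pairing symbolic events with time-stamped samples."""
    narrative: List[NarrativeEvent] = field(default_factory=list)
    # Subsymbolic data: (timestamp, gripper height in metres) samples.
    samples: List[Tuple[float, float]] = field(default_factory=list)

    def samples_during(self, action: str) -> List[Tuple[float, float]]:
        """Return the samples recorded while an event labelled `action` was active."""
        spans = [(e.start, e.end) for e in self.narrative if e.action == action]
        return [
            (t, v)
            for t, v in self.samples
            if any(start <= t <= end for start, end in spans)
        ]


neem = Neem(
    narrative=[
        NarrativeEvent("Reaching", 0.0, 1.0),
        NarrativeEvent("Lifting", 1.0, 2.0),
    ],
    samples=[(0.5, 0.90), (1.5, 1.05), (2.5, 1.10)],
)
print(neem.samples_during("Lifting"))
```

A symbolic question ("what did the gripper do while lifting?") is answered by selecting the matching slice of subsymbolic data.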

For further details, read the NEEM-Handbook (PDF).

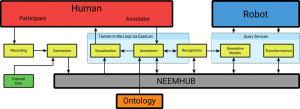

In Research Area H of EASE, we developed the Human Activities Data Analysis Pipeline, our common unified framework for data capturing and processing, which emerged from close collaboration within the research area.

The pipeline outlines the flow of information between humans and robots with the NEEM Hub as its central interconnecting component.

The Recording stage encompasses any kind of everyday activity data acquisition. To automate recordings of high-dimensional multimodal datasets, we implemented a software framework that integrates a heterogeneous setup of sensors, actuators, computers, and third-party software into a unified environment.

The Visualization and Annotation stage uses EaseLAN, an extended version of the ELAN data annotation software. It comprises the semi-automated process of NEEM-Narrative creation for human data.

The Recognition stage integrates several automatic classification and recognition components that are based on Models of Human Activity from multiple modalities, such as speech, video, motion capture, and muscle and brain activity.

In the Conversion stage, data is arranged into a NEEM-compatible format and automatically uploaded to the NEEM Hub. Once generated, the NEEMs become available to the robot, either through direct queries of individual episodes or indirectly through a generative model that generalizes over the available NEEMs.
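The two access paths, direct queries of single episodes and generalization over many, can be contrasted with a toy in-memory store. This sketch assumes a great deal: `ToyNeemHub`, its methods, and the event records are hypothetical and do not reflect the NEEM Hub interface, and a plain mean stands in for the generative model, which it is not.

```python
from statistics import mean
from typing import Dict, List


class ToyNeemHub:
    """In-memory stand-in for a NEEM store (hypothetical, not the NEEM Hub API)."""

    def __init__(self) -> None:
        self._episodes: Dict[str, List[dict]] = {}

    def upload(self, episode_id: str, events: List[dict]) -> None:
        """Store converted data: a list of {'action', 'start', 'end'} records."""
        self._episodes[episode_id] = events

    def query_episode(self, episode_id: str, action: str) -> List[dict]:
        """Direct access: events of one type within a single episode."""
        return [e for e in self._episodes[episode_id] if e["action"] == action]

    def mean_duration(self, action: str) -> float:
        """Indirect access: a summary generalized over all stored episodes.

        A plain average is used here only as a placeholder for a real model.
        """
        durations = [
            e["end"] - e["start"]
            for events in self._episodes.values()
            for e in events
            if e["action"] == action
        ]
        return mean(durations)


hub = ToyNeemHub()
hub.upload("ep1", [{"action": "Pouring", "start": 0.0, "end": 4.0}])
hub.upload("ep2", [{"action": "Pouring", "start": 1.0, "end": 7.0}])
print(hub.query_episode("ep1", "Pouring"))
print(hub.mean_duration("Pouring"))
```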

You can find more details in our overview paper on the Human Activities Data Analysis Pipeline (PDF) presented at IROS 2020.

See also the video about the achievements in Research Area H at csl.uni-bremen.de/.

We have developed AMEvA (Automated Models of Everyday Activities), a computer system that can observe, interpret, and record fetch-and-place tasks, automatically abstract the observed activities into action models, and represent these models as NEEMs. These NEEMs can be used to answer questions about the observed human activities and to learn generalized knowledge from collections of NEEMs.

For example, a human performs fetch-and-place tasks in a virtual kitchen environment. An interpreter that interacts tightly with the physics engine of the virtual environment detects force-dynamic events, such as the hand making contact with the object to be picked up, the object being lifted off the supporting surface, and so on. These events are processed by an activity parser in order to recognize actions and segment them into motion phases. The parse trees of activities are then converted into NEEMs, which are compatible with the NEEMs collected by robots and linked to the same ontology.
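The step from force-dynamic events to segmented actions can be sketched as a very small parser. This is an illustration under assumptions, not AMEvA's parser or grammar: the event names, the phase names, and the rule that each consecutive pair of events delimits one phase are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Event = Tuple[float, str]         # (timestamp in seconds, event type)
Phase = Tuple[str, float, float]  # (phase name, start, end)

# Hypothetical grammar: a pair of consecutive events delimits one motion phase.
PHASE_BETWEEN: Dict[Tuple[str, str], str] = {
    ("hand_contact", "support_lost"): "Grasping",
    ("support_lost", "support_gained"): "Transporting",
    ("support_gained", "hand_release"): "Releasing",
}


def segment(events: List[Event]) -> List[Phase]:
    """Turn a time-ordered event stream into motion phases."""
    phases = []
    for (t0, e0), (t1, e1) in zip(events, events[1:]):
        name = PHASE_BETWEEN.get((e0, e1))
        if name is not None:
            phases.append((name, t0, t1))
    return phases


def parse_fetch_and_place(events: List[Event]) -> Optional[dict]:
    """Recognize one fetch-and-place action if all three phases occur in order."""
    phases = segment(events)
    if [name for name, _, _ in phases] != ["Grasping", "Transporting", "Releasing"]:
        return None
    return {
        "action": "FetchAndPlace",
        "start": phases[0][1],
        "end": phases[-1][2],
        "phases": phases,
    }


events = [
    (0.0, "hand_contact"),    # hand touches the object
    (0.4, "support_lost"),    # object leaves the supporting surface
    (2.1, "support_gained"),  # object rests on a surface again
    (2.3, "hand_release"),    # hand lets go
]
print(parse_fetch_and_place(events))
```

The returned dictionary plays the role of a tiny parse tree: one action node with its motion phases as children, each carrying a time interval that could be linked to recorded data.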

For further details, take a look at the NEEM-Handbook (PDF).