EASE Research Areas

The research activities of the CRC EASE are structured into three main Research Areas (H, P and R). Each Research Area consists of several subprojects. Below you will find an overview of the subprojects, their research focus, and their leaders (Principal Investigators).

Further subprojects include the Project Management and Central Services (Z) for the administration and coordination of the CRC as a whole, the Integrated Research Training Group (MGK), the Information Infrastructure (INF) for the storage, management, and maintenance of the data generated by EASE, and the Laboratory Infrastructure Support (F) for the establishment of the EASE central robotics lab.

Research Area H

Descriptive models of human everyday activity

Research Area P

Principles of information processing for everyday activity

Research Area R

Generative models for mastering everyday activity and their embodiment

The goal of Research Area H is to understand why and how humans can carry out vague instructions for everyday activities efficiently and respond quickly and flexibly to new situations. It investigates hypotheses about the form and role of Pragmatic Everyday Activity Manifolds (PEAMs) in competent human everyday manipulation tasks. Its aim is to design computational mechanisms that achieve such efficiency and are as adaptive as human activities.

- H01: Sensory-motor and Causal Human Activity Models for Cognitive Architectures

Focus on the causal sensory-motor models that are encapsulated in long-term memory, and on the impact of causal modelling, through its predictive power, its inherent generalization capabilities, and its potential for explanation, on three levels of operation.

Subproject Leaders (PI): Prof. Dr. Kerstin Schill, Prof. Dr. rer. nat. Vanessa Didelez, Dr. Christoph Zetzsche

- H03: Discriminative and Generative Human Activity Models for Cognitive Architectures

Development of hybrid discriminative and generative models of human activity (exploiting context-free grammars, probabilistic action units, and deep multi-modal networks) to identify new ways of describing the temporally extended hierarchical organization of motion primitives that comprise complex actions.

Subproject Leaders (PI): Prof. Dr. Kerstin Schill, Prof. Dr. Tanja Schultz

- H04: Decision Making for Cognitive Architectures – Neuronal Signatures and Behavioral Data

Modeling of human learning and decision-making processes to inform a cognitive robot about which decisions are necessary, and when they are necessary, to master complex everyday activities, drawing on human abilities to generate flexible, context-sensitive behaviour.

Subproject Leaders (PI): Prof. Dr. Tanja Schultz, Prof. Dr. Manfred Herrmann, Prof. Dr. rer. nat. Bettina von Helversen

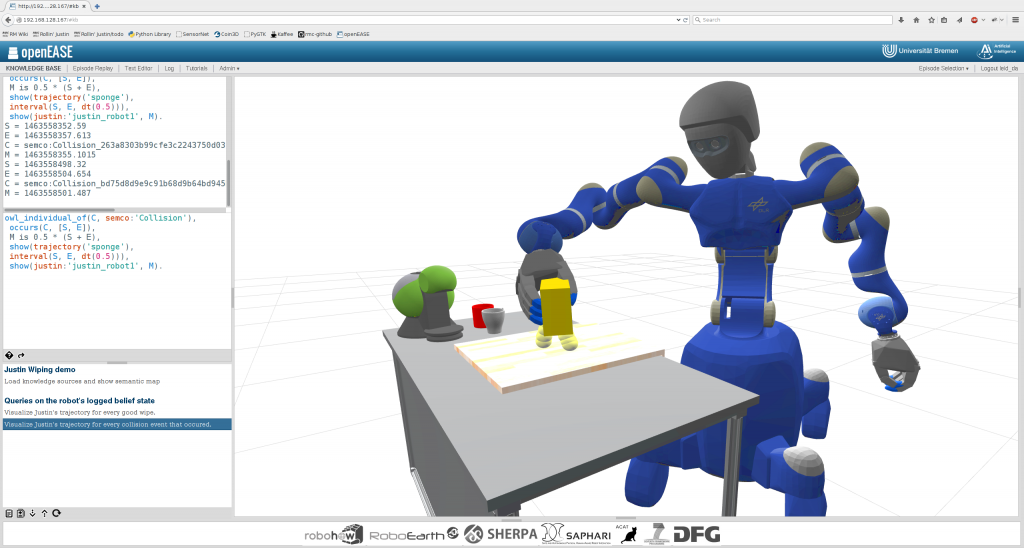

The goal of Research Area P is to understand the representation and reasoning foundations of information processing methods that enable robotic agents to master everyday activities. The knowledge about everyday activity from Research Area H needs to be represented such that it is useful to artificial systems. Research Area P investigates the representational foundations, reasoning techniques, and formalizations of Narrative-enabled Episodic Memories (NEEMs), as well as common knowledge and plans for mastering everyday activities. Its aim is to design representation and reasoning mechanisms that capture the intuitions behind human activities. It uses information from Research Area H while at the same time providing feedback to Research Area H.

- P01: Embodied semantics for the language of action and change

(Aktionsbasierte Semantik für die Sprache von Handlung und Wirkung)

Investigation of simulation-based semantics for action steps (based on the respective action verb) that constitute the atomic steps of narratives, and creation of logical formalizations of compound narratives.

Subproject Leaders (PI): Prof. Dr. John Bateman, Prof. Dr. Rainer Malaka.

- P02: Ontologies with Abstraction

Investigation of the fundamental trade-off between expressiveness and tractability in ontological reasoning by identifying PEAMs for reasoning that have just the right expressiveness.

Subproject Leaders (PI): Prof. Dr. Carsten Lutz, Prof. Dr. John Bateman.

- P05: Principles of Metareasoning for Everyday Activities

Investigation and design of an adaptive internal query answering mechanism that can employ hybrid methods. Examination and provision of mechanisms for the contextual anticipation of ensuing reasoning tasks so that they can be completed without compromising other ongoing tasks.

Subproject Leaders (PI): Prof. Dr. John Bateman, Prof. Dr. Rainer Malaka.

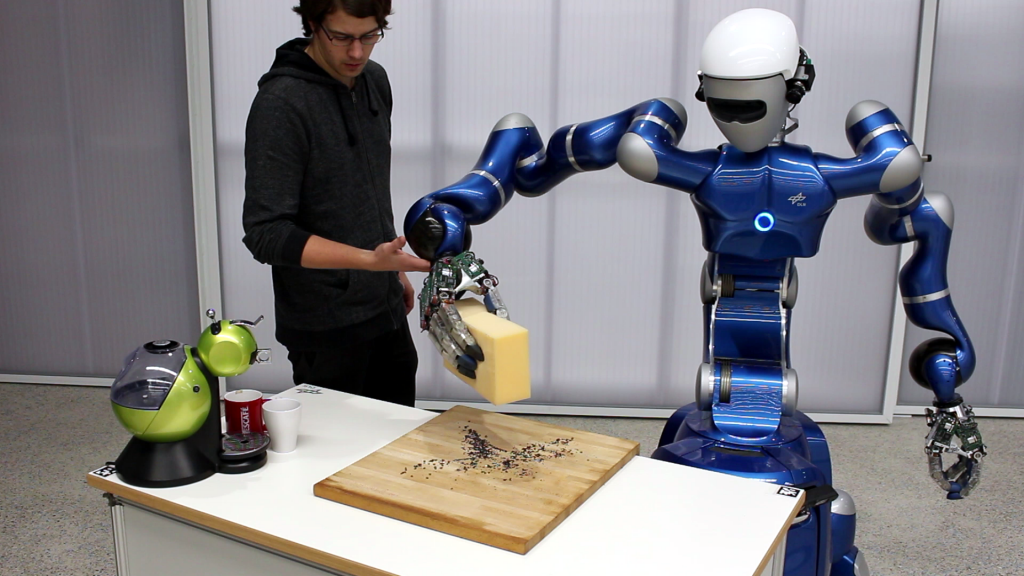

The goal of Research Area R is to investigate a control framework including perception, learning, and reasoning mechanisms that enable robotic agents to master human-scale everyday manipulation tasks. It investigates the information processing infrastructure necessary for robotic agents to take vague task descriptions and use information about the task, situational context, and object context to perform the task appropriately. This infrastructure will improve with experience (NEEMs) and by exploiting the routine and mundane character of everyday activity tasks, making use of the PEAMs investigated in Research Areas H and P. At the same time, the findings from Research Area R are fed back to both Research Areas H and P.

- R01: CRAM 2.0 — a 2nd generation cognitive robot architecture for accomplishing everyday manipulation tasks

Identification, design, and implementation of the representations and processes required to realize the situation model framework for flexible and context-sensitive cognitive behavior. Development of a deep causal learning and inference framework for action sequences and of affordance detection for the objects being interacted with.

Subproject Leaders (PI): Prof. Dr. h.c. Michael Beetz PhD, Prof. Dr. Gordon Cheng, Prof. David Vernon.

- R02: Multi-cue perception based on background knowledge

(Hintergrundwissen für Multi-Komponenten-Perzeption)

Investigation of the efficient and robust accomplishment of selected challenging perception problems in the context of everyday activity that require common knowledge and the exploitation of PEAMs.

Subproject Leader (PI): Prof. Dr. Udo Frese.

- R03: A knowledge representation and reasoning framework for robot prospection in everyday activity

Investigation of prospection in robot agents by designing, developing, and studying a knowledge representation and reasoning (KR&R) framework for prospection that includes and maintains different forms and modes of prospection.

Subproject Leaders (PI): Prof. Dr. Gabriel Zachmann, Prof. Dr. h.c. Michael Beetz PhD.

- R04: Cognition-enabled execution of everyday actions

Design and realization of a cognition-enabled, plan-based, and context-aware execution component, i.e. an action executive, that continuously monitors action execution and intervenes spontaneously and smoothly to maximize success and efficiency.

Subproject Leaders (PI): Prof. Dr. h.c. Michael Beetz PhD, Prof. Dr. Alin Albu-Schäffer.

- R05: Episodic memory for everyday manual activities

(Episodische Gedächtnisse für manuelle Alltagsaktivitäten)

Investigation of how collections of NEEMs of everyday manual actions can be obtained for robots and how they can be used to bridge between semantic, procedural, and perceptual memories in order to support competent and dexterous manipulation in everyday contexts.

Subproject Leaders (PI): Prof. Dr. Helge Ritter, Prof. Dr. h.c. Michael Beetz PhD.

- R06: Fault-Tolerant Manipulation Planning and Failure Handling

Development of suitable failure representations that can encode failure situations and the resulting state changes on-line, so that the robot can recover appropriately. Design of inference strategies that enable a robot not only to recognize that a failure has occurred, but also to recognize possible consequences in the form of undesirable effects and subsequently correct them. Implementation of AI-based reasoning and failure recovery mechanisms.

Subproject Leader (PI): Dr. Daniel Leidner.

Pictures taken at Automatica 2018 in Munich: Human demonstrations in a virtual environment. Visitors could put on VR glasses and move around in a virtual kitchen. 3D modelling is used in several Research Areas.

Photo Credits: Wibke Borngesser (TUM).