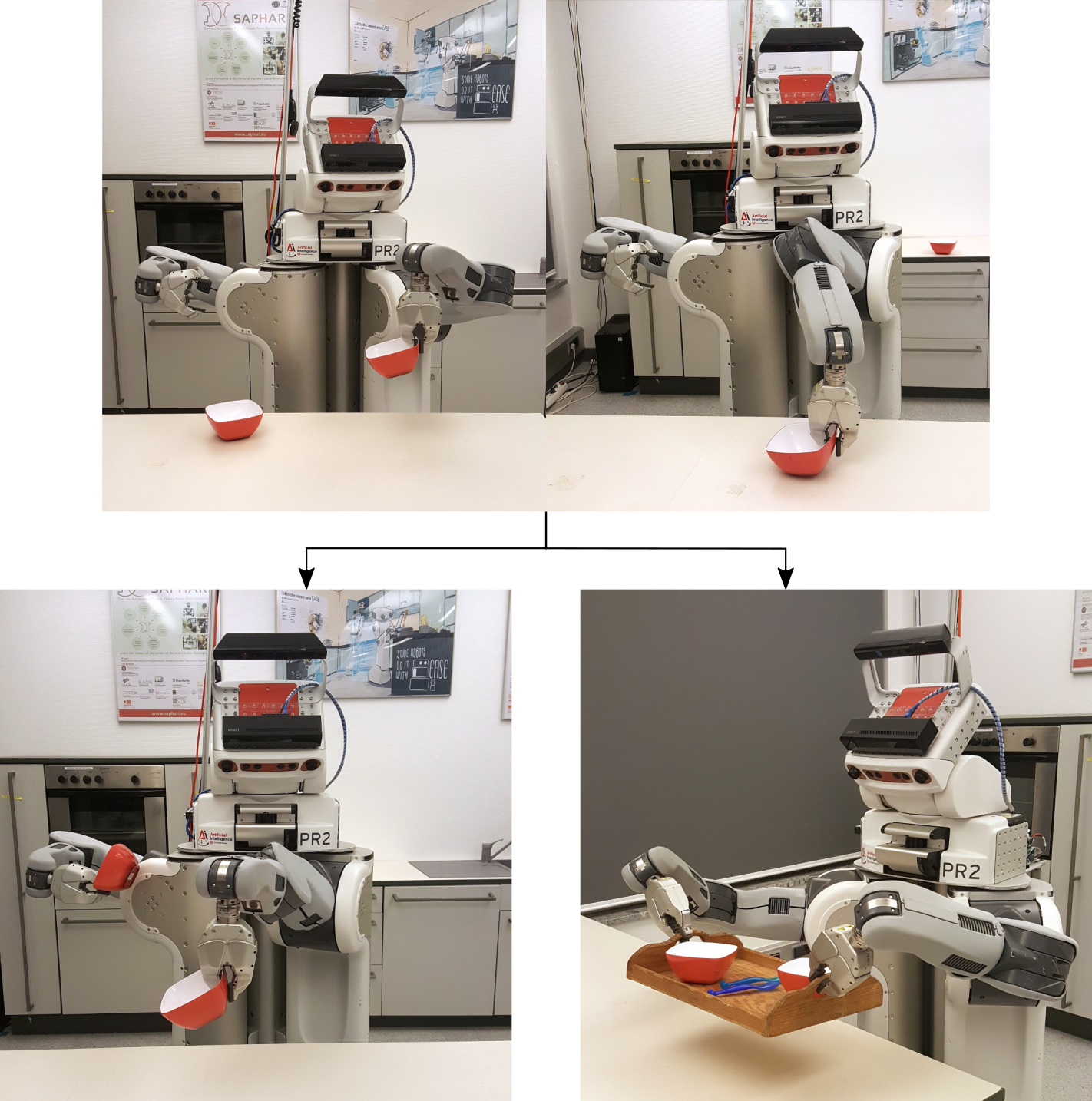

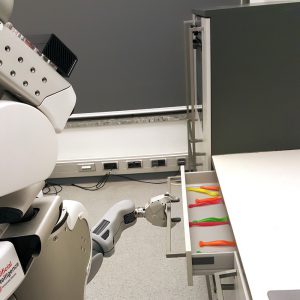

Autogenerated or preprogrammed action plans for robots are not always optimal for the situation at hand. There may be opportunities to optimize the robot's behavior with respect to a certain cost, e.g., execution time, by utilizing unused resources of the robot or the environment. For example, if the robot has more than one arm, small objects that would otherwise be carried one at a time with a single hand can be transported together using multiple arms. Another example is to use a tool to aid execution, such as a tray for transporting multiple objects at a time (see the Figure above).

In general, a behavior may leave free resources of the robot and the environment unused because many of the exact parameters of the actions, such as the size, weight and exact location of objects or the positions of obstacles in space, are unknown at the time the robot behavior specification is created. Thus, the robot needs to be able to change its course of action during execution, adapting its behavior to the situation at hand.

We present an approach to improve real-world robot plan execution and achieve better performance by autonomously transforming the robot’s behavior at runtime. Transformational planning [1], which we apply in our framework, is a very powerful technique for autonomously changing a robot’s course of action. An example transformation rule for the domain of mobile pick and place would be: “if the goal is to take two objects out of the same container, transform your original plan by omitting the first container closing action, as well as the subsequent opening action”. Our framework shows that substantial changes in action strategies, such as in the examples above, can be achieved in real-world mobile manipulation plans by plan transformations, without introducing bugs.

Several prior works have changed the order of actions in a plan and the corresponding parameters in order to improve performance (e.g., [2], [3], [4]). However, most of them were applied to robots acting in simulated environments, whose plans are much simpler than real-world plans, as the real world is only partially observable and non-deterministic. Our framework is applied to real-world plans, and our approach to implementing plan transformations is novel in comparison to the related work.

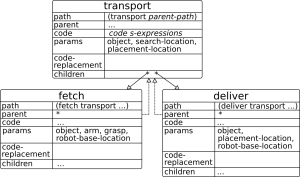

Plan structure and task trees

A plan in our system consists of a composition (sequential, partially ordered or concurrent) of calls to perform actions, often secured by failure handling mechanisms for achieving reliable behavior. Performing an action within a plan is, in turn, implemented by calling its respective subplan. For example, transporting an object from one location to another is implemented as the sequence of searching for the object, fetching it and delivering it, while fetching an object involves positioning the robot within reach of the object, then grasping and lifting it. If the object cannot be found within the camera view of the robot or if it is unreachable from the current location, failure recovery strategies command the robot to reposition itself, try grasping with another arm, etc. With these hierarchical plans and failure handling constructs, we are able to construct complex scenarios of robot activities.
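To make the hierarchical structure concrete, here is a minimal Python sketch under the assumption of a toy world model. The names `World`, `transport`, `fetch`, `grasp` and `ManipulationFailure` are hypothetical and not the framework's API; the sketch only shows a top-level action composed of subplans, with a recovery strategy that retries grasping with the other arm.

```python
from __future__ import annotations

from dataclasses import dataclass, field


class ManipulationFailure(Exception):
    """Hypothetical failure raised by a low-level action."""


@dataclass
class World:
    # Toy stand-in for robot and environment: which arms can reach which objects.
    reachable: dict[str, set[str]]
    log: list[str] = field(default_factory=list)


def search(world: World, obj: str) -> None:
    world.log.append(f"search {obj}")


def grasp(world: World, obj: str, arm: str) -> None:
    if arm not in world.reachable.get(obj, set()):
        raise ManipulationFailure(f"{obj} is unreachable with the {arm} arm")
    world.log.append(f"grasp {obj} with {arm}")


def fetch(world: World, obj: str) -> str:
    # Subplan: position the robot within reach, then grasp and lift.
    # Failure handling: if grasping fails with one arm, try the other one.
    world.log.append(f"position near {obj}")
    for arm in ("right", "left"):
        try:
            grasp(world, obj, arm)
        except ManipulationFailure:
            world.log.append(f"grasp with {arm} failed, retrying")
            continue
        world.log.append(f"lift {obj}")
        return arm
    raise ManipulationFailure(f"could not fetch {obj}")


def deliver(world: World, obj: str, location: str, arm: str) -> None:
    world.log.append(f"place {obj} on {location} with {arm}")


def transport(world: World, obj: str, location: str) -> None:
    # Top-level action implemented as a sequence of subplans.
    search(world, obj)
    arm = fetch(world, obj)
    deliver(world, obj, location, arm)


world = World(reachable={"cup": {"left"}})
transport(world, "cup", "table")
```

In the usage at the bottom, the cup is only reachable with the left arm, so the right-arm grasp fails and the recovery path takes over without the top-level `transport` plan having to know about it.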

To analyze the robot’s behavior and transform it in any scenario and context, we make use of the task tree data structure, introduced in our previous work [5]. The task tree is the runtime representation of the executed plan and contains all plan-relevant information, including the parameters the plans were called with. At runtime, a node is automatically created in the task tree for each executed action, containing references to child nodes for every subplan executed within that plan. The top-level plan generates the root node of the tree, and the leaves are created when atomic motions are executed (see the Figure below).
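The following Python sketch illustrates how such a tree can be built automatically while a plan runs. It assumes a hypothetical `TaskTreeRecorder` whose context manager opens one node per executed action and attaches it to the currently active node; it illustrates the data structure only and is not the implementation from [5].

```python
from __future__ import annotations

from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Iterator


@dataclass
class TaskNode:
    # One node per executed action, holding the parameters it was called with.
    name: str
    params: dict[str, Any]
    children: list[TaskNode] = field(default_factory=list)

    def leaves(self) -> list[TaskNode]:
        if not self.children:
            return [self]
        return [leaf for child in self.children for leaf in child.leaves()]


class TaskTreeRecorder:
    """Hypothetical recorder that builds the task tree while a plan runs."""

    def __init__(self, name: str, **params: Any) -> None:
        self.root = TaskNode(name, params)  # the top-level plan is the root
        self._stack = [self.root]

    @contextmanager
    def action(self, name: str, **params: Any) -> Iterator[TaskNode]:
        # Entering a subplan appends a child to the currently active node.
        node = TaskNode(name, params)
        self._stack[-1].children.append(node)
        self._stack.append(node)
        try:
            yield node
        finally:
            self._stack.pop()


rec = TaskTreeRecorder("transport", object="cup", target="table")
with rec.action("search", object="cup"):
    pass
with rec.action("fetch", object="cup"):
    with rec.action("navigate", pose="near-counter"):
        pass
    with rec.action("grasp", arm="left"):
        pass
with rec.action("deliver", object="cup", target="table"):
    with rec.action("place", arm="left"):
        pass
```

Here the leaves (`search`, `navigate`, `grasp`, `place`) play the role of atomic motions, and every node keeps the arguments it was called with.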

We exploit the fact that the task tree persists between multiple executions of the same plan program code. Thus, during the second execution of the plan, its task tree is not constructed anew; instead, the old one is updated with the plan arguments used in the current execution. Therefore, transforming a task tree and re-executing its plan results in transformed behavior on the robot. This is the basis of our plan transformation approach: the robot first executes the plan in a fast plan projection simulator [6], then examines the generated task tree, applies the corresponding transformations and finally executes the same plan in the real world, resulting in altered behavior. As plan projection is much faster than real time (about 50 times faster), this process does not significantly delay execution.
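A minimal sketch of why persistence enables the project, transform and execute loop, assuming (hypothetically) that nodes are identified by their path in the tree. The second run finds the existing nodes, refreshes their arguments and keeps whatever a transformation attached to them; `PersistentTaskTree`, `notes` and `behavior` are illustrative names only.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any


@dataclass
class TaskNode:
    params: dict[str, Any] = field(default_factory=dict)
    notes: dict[str, Any] = field(default_factory=dict)  # e.g. written by a transformation


class PersistentTaskTree:
    """Hypothetical task tree keyed by node path; it outlives a single plan execution."""

    def __init__(self) -> None:
        self.nodes: dict[tuple[str, ...], TaskNode] = {}

    def enter(self, path: tuple[str, ...], **params: Any) -> TaskNode:
        node = self.nodes.get(path)
        if node is None:  # first execution: create the node
            node = self.nodes[path] = TaskNode()
        node.params = dict(params)  # later executions: only refresh the arguments
        return node


def run_plan(tree: PersistentTaskTree, obj: str) -> list[str]:
    # Toy plan body: reports which behavior each step would produce.
    trace = []
    for step in ("search", "fetch", "deliver"):
        node = tree.enter(("transport", step), object=obj)
        trace.append(node.notes.get("behavior", step))
    return trace


tree = PersistentTaskTree()
projected = run_plan(tree, "cup")  # 1. run in the fast projection simulator
tree.nodes[("transport", "deliver")].notes["behavior"] = "deliver-via-tray"  # 2. transform
executed = run_plan(tree, "cup")  # 3. real run reuses the same, transformed tree
```

Because the plan code itself is never edited, the altered behavior in step 3 comes entirely from the state stored in the persisted tree.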

Transformation rules

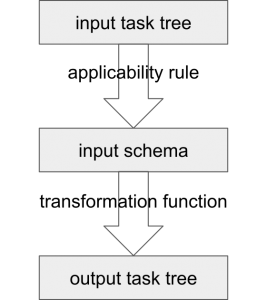

The Figure below illustrates the general pipeline of our plan transformation framework:

First, a plan is executed in a plan projection simulator, which generates an input task tree. The applicability rule determines whether a transformation is suitable for the given input task tree by traversing the tree via Prolog predicates and searching for patterns that can be used in a transformation. Additionally, the applicability rule extracts information from the relevant nodes of the tree, such as node paths and relevant plan parameters, which constitutes the input schema of the transformation. The applicability rule checks both the structural and the semantic applicability of a transformation. The input schema is subsequently passed to the transformation function, which sets the code-replacement slots of nodes in the task tree, assigning them different code to execute instead of the original. When a plan is executed, the code-replacement slot of the node corresponding to the currently active subplan is examined and, when present, the given code replacement is executed instead of the original code of the subplan. By changing the content of single nodes instead of producing a completely new plan, we can modify the task tree in various ways without affecting the original plan, since the effect of applied transformations only manifests itself at runtime.
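The pipeline can be sketched in Python under simplifying assumptions: the task tree is flattened into a list of hypothetical `Node` objects, and the applicability rule, written here as a Python generator rather than as Prolog predicates, matches the container example from the introduction (a container that is closed and later reopened). Each match yields an input schema of node paths and parameters; the transformation function only fills code-replacement slots, so the nodes and their order stay intact.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable, Iterator


@dataclass
class Node:
    path: str
    action: str
    params: dict[str, Any]
    replacement: Callable[[Node, list[str]], None] | None = None  # code-replacement slot

    def code(self, log: list[str]) -> None:
        # Original code of the subplan (here it just records what it would do).
        log.append(" ".join([self.action, *map(str, self.params.values())]))


def execute(nodes: list[Node], log: list[str]) -> None:
    # At runtime, a filled code-replacement slot takes precedence over the original code.
    for node in nodes:
        if node.replacement is not None:
            node.replacement(node, log)
        else:
            node.code(log)


def applicability_rule(nodes: list[Node]) -> Iterator[dict[str, Any]]:
    # Pattern: a container is closed and later opened again.
    # Each match yields an input schema: node paths plus the relevant parameter.
    last_close: dict[str, str] = {}
    for node in nodes:
        container = node.params.get("container")
        if node.action == "close":
            last_close[container] = node.path
        elif node.action == "open" and container in last_close:
            yield {"paths": [last_close.pop(container), node.path], "container": container}


def transformation_function(nodes: list[Node], schema: dict[str, Any]) -> None:
    # Fill code-replacement slots with a no-op; the nodes themselves stay in place.
    by_path = {node.path: node for node in nodes}
    for path in schema["paths"]:
        by_path[path].replacement = lambda node, log: None


plan = [
    Node("t1/open", "open", {"container": "drawer"}),
    Node("t1/grasp", "grasp", {"object": "spoon"}),
    Node("t1/close", "close", {"container": "drawer"}),
    Node("t1/place", "place", {"object": "spoon"}),
    Node("t2/open", "open", {"container": "drawer"}),
    Node("t2/grasp", "grasp", {"object": "fork"}),
    Node("t2/close", "close", {"container": "drawer"}),
    Node("t2/place", "place", {"object": "fork"}),
]
for schema in list(applicability_rule(plan)):
    transformation_function(plan, schema)
log: list[str] = []
execute(plan, log)
```

After the transformation the drawer is opened once and closed once, while `plan` still contains all eight original nodes.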

Below we describe three example transformations for the fetch and place domain that we have implemented to demonstrate our framework. The transformations differ from one another in the resources of the robot and the environment that they utilize to improve execution performance, as well as in the effect that they have on the task tree.

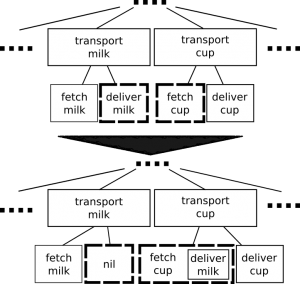

Transporting objects with both hands

The first transformation, which we call both-hands-transform, turns parts of a general plan that transport two objects one after another, each time with one hand, into transporting both objects at once. It extracts two actions of type transporting from the task tree that satisfy the applicability rule outlined below, then switches the order of their fetching and delivering actions. Whereas in the original plan the robot first fetches one object and delivers it immediately, in the transformed plan the delivery of the first object is delayed and instead executed after the second object has been fetched (see the Figure below).

This transformation requires at least two transporting actions to be present in the plan. The fetching locations of both objects have to be the same symbolic location, and so do their delivering locations; otherwise the robot could navigate a long distance only to use its second hand. In the original plan, each transporting action also has to be performed with a single arm. All of this is checked by the applicability rule.
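A hedged Python sketch of both-hands-transform, assuming each transport is summarized by a hypothetical `Transport` record and each subtask is addressed by a node path. Instead of restructuring the tree, the sketch assigns code replacements: the slot of the first delivery now fetches the second object with the free arm, and the slot of the second fetch delivers the first object, which yields the reordering described above. Whether the original system realizes the swap exactly this way is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass

OTHER_ARM = {"left": "right", "right": "left"}


@dataclass(frozen=True)
class Transport:
    path: str         # node path of the transporting action
    obj: str
    fetch_loc: str    # symbolic location, e.g. "counter"
    deliver_loc: str
    arm: str          # the single arm used in the original plan


def applicable(a: Transport, b: Transport) -> bool:
    # Same symbolic fetch and delivery locations; each transport uses one arm,
    # so the other arm is free to carry the second object.
    return (a.fetch_loc == b.fetch_loc
            and a.deliver_loc == b.deliver_loc
            and a.arm in OTHER_ARM and b.arm in OTHER_ARM)


def original_steps(a: Transport, b: Transport) -> dict[str, tuple[str, str, str]]:
    # Node path -> (action, object, arm), in execution order.
    return {
        f"{a.path}/fetch": ("fetch", a.obj, a.arm),
        f"{a.path}/deliver": ("deliver", a.obj, a.arm),
        f"{b.path}/fetch": ("fetch", b.obj, b.arm),
        f"{b.path}/deliver": ("deliver", b.obj, b.arm),
    }


def both_hands_transform(a: Transport, b: Transport) -> dict[str, tuple[str, str, str]]:
    # Code replacements: a's delivery slot fetches b with the free arm,
    # b's fetch slot delivers a, and b is delivered with the free arm.
    free = OTHER_ARM[a.arm]
    return {
        f"{a.path}/deliver": ("fetch", b.obj, free),
        f"{b.path}/fetch": ("deliver", a.obj, a.arm),
        f"{b.path}/deliver": ("deliver", b.obj, free),
    }


def execute(steps: dict[str, tuple[str, str, str]],
            replacements: dict[str, tuple[str, str, str]]) -> list[tuple[str, str, str]]:
    # A filled slot replaces the original step; unfilled slots run as before.
    return [replacements.get(path, step) for path, step in steps.items()]


cup = Transport("transport-1", "cup", "counter", "table", "right")
bowl = Transport("transport-2", "bowl", "counter", "table", "right")
result = (execute(original_steps(cup, bowl), both_hands_transform(cup, bowl))
          if applicable(cup, bowl) else None)
```

The resulting order is fetch cup, fetch bowl, deliver cup, deliver bowl, so the robot travels to the table only once.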

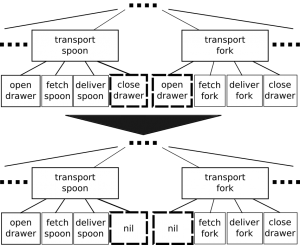

Avoiding redundant environment manipulation

This transformation, called container-transform, removes redundant container opening and closing actions. It can be applied to plans that include multiple transporting actions whose fetching location is inside a container. The Figure below shows the container-transform being applied to a task tree for fetching a spoon and a fork from a drawer.

In contrast to both-hands-transform, the transformation function of container-transform does not take only the first set of bindings generated by the applicability rule but a list of all possible bindings, i.e., a list of all transporting actions that share the same container. Thus, if there are multiple containers and multiple transports take place from each of them, the function distinguishes between the different containers and handles them separately, such that each container is opened and closed exactly once.
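The difference between taking the first binding and taking all bindings can be sketched as follows. `Transport`, `all_bindings` and the `fetch/open` and `fetch/close` path suffixes are hypothetical. Transports are grouped by container, and for each group with at least two transports, every close except the last and every open except the first are marked for a no-op code replacement.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transport:
    path: str               # node path of the transporting action
    obj: str
    container: str | None   # container the object is fetched from, if any


def all_bindings(transports: list[Transport]) -> dict[str, list[Transport]]:
    # Collect every match, grouped by container, instead of the first one only.
    groups: dict[str, list[Transport]] = defaultdict(list)
    for t in transports:
        if t.container is not None:
            groups[t.container].append(t)
    return {c: ts for c, ts in groups.items() if len(ts) >= 2}


def container_transform(transports: list[Transport]) -> set[str]:
    # Paths of open/close subtasks whose code is replaced by a no-op: each container
    # is then opened only by its first transport and closed only by its last one.
    skipped: set[str] = set()
    for ts in all_bindings(transports).values():
        skipped.update(f"{t.path}/fetch/close" for t in ts[:-1])
        skipped.update(f"{t.path}/fetch/open" for t in ts[1:])
    return skipped


plan = [
    Transport("t1", "spoon", "drawer"),
    Transport("t2", "milk", "fridge"),
    Transport("t3", "fork", "drawer"),
    Transport("t4", "cup", None),
    Transport("t5", "butter", "fridge"),
]
skipped = container_transform(plan)
```

With two containers in the example, the drawer and the fridge are each handled on their own, and the cup, which is not in a container, is left untouched.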

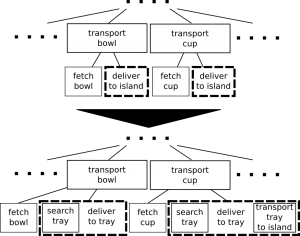

Transporting objects with a tray

Whereas both-hands-transform changes the plan to carry two objects at once, a tray allows transporting more than two objects at a time. The Figure below illustrates tray-transform on a task tree for transporting a bowl and a cup (only two objects are used in the Figure for better readability).

The applicability rule of tray-transform is a simplified version of that of both-hands-transform, since both transformations require at least two transporting actions whose fetching locations and delivering locations, respectively, are similar. As tray-transform does not consider fetching actions and only requires the paths and action descriptions of the delivering tasks, its applicability predicate is simpler. The tray-transform redirects the delivery locations of all applicable transporting actions to a location on the tray.
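A minimal Python sketch of tray-transform's simplified applicability check and redirection, assuming hypothetical `DeliverTask` records. The `tray/slot-i` location naming is an illustrative assumption, since the text only states that deliveries are redirected to a location on the tray.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DeliverTask:
    path: str       # node path of the deliver subtask
    obj: str
    location: str   # symbolic delivery location


def tray_transform(delivers: list[DeliverTask], tray: str = "tray") -> dict[str, DeliverTask]:
    # Applicable: at least two deliveries to the same location (fetch subtasks are ignored).
    counts = Counter(d.location for d in delivers)
    applicable = [d for d in delivers if counts[d.location] >= 2]
    # Replacement per deliver node: same object, redirected to a distinct spot on the tray.
    return {d.path: replace(d, location=f"{tray}/slot-{i}") for i, d in enumerate(applicable)}


delivers = [
    DeliverTask("t1/deliver", "bowl", "table"),
    DeliverTask("t2/deliver", "cup", "table"),
    DeliverTask("t3/deliver", "pot", "stove"),
]
replacements = tray_transform(delivers)
```

Only the deliveries that share a target location are redirected; the single delivery to the stove keeps its original code.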

Results & Future Work

With the outlined system, we have shown that transformation techniques can be realized on plans designed for robots acting in the real world by using code replacements. For utilizing the system on the robot, a plan is first projected, then automatically transformed and, finally, executed right away in the real world to produce more efficient behavior.

We evaluated our approach on a large number of experiments in a fast plan projection simulator to show that execution performance is improved in a statistically significant manner. We also transformed a real-world table setting and cleaning plan to demonstrate the feasibility of the approach (see the Figures below and the Video above).

We are currently moving this research direction forward by considering an implementation where the structure of the task tree can also be altered, i.e., nodes can be added to and removed from the task tree.

For more details on the described system, we kindly ask you to take a look at the relevant publication.

Relevant publication:

Gayane Kazhoyan, Arthur Niedzwiecki and Michael Beetz, “Towards Plan Transformations for Real-World Mobile Fetch and Place”, In ICRA, 2020.

Access it here: https://arxiv.org/abs/1812.08226

Citations:

[1] D. McDermott, “Robot planning,” AI Magazine, vol. 13, no. 2, pp. 55, 1992.

[2] D. Hadfield-Menell, L. P. Kaelbling, and T. Lozano-Pérez, “Optimization in the now: Dynamic peephole optimization for hierarchical planning,” in ICRA, 2013.

[3] A. Müller, A. Kirsch, and M. Beetz, “Transformational planning for everyday activity,” in ICAPS, 2007.

[4] S. Botelho and R. Alami, “Robots that cooperatively enhance their plans,” in Distributed Autonomous Robotic Systems 4, Proceedings of the 5th International Symposium on Distributed Autonomous Robotic Systems, DARS, 2000.

[5] L. Mösenlechner, N. Demmel, and M. Beetz, “Becoming action-aware through reasoning about logged plan execution traces,” in IROS, 2010.

[6] G. Kazhoyan and M. Beetz, “Executing underspecified actions in real world based on online projection,” in IROS, 2019.